After a short introduction and a high-level feature comparison in part 1, let’s look at the APIs in action and see how they perform on some images. Make sure to check part 3 for an analysis of the APIs themselves.

We’ll run some images through the interactive demo site of each service and list the results.

Generic scenes

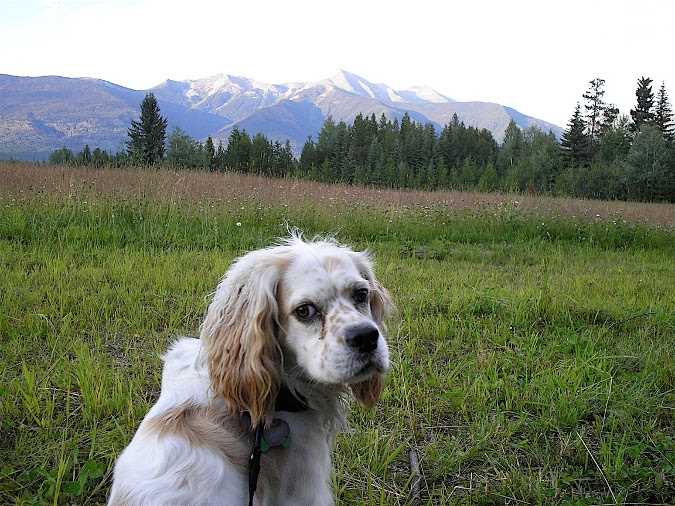

Test 1: Sample from Google demo

Tags

| Google | Microsoft | Watson |
|---|---|---|
| pet (98%) | outdoor (99%) | animal (100%) |
| dog (98%) | grass (99%) | mammal (100%) |
| mammal (96%) | sky (99%) | dog (98%) |
| animal (95%) | dog (97%) | retriever (65%) |
| vertebrate (89%) | field (92%) | |
| | animal (80%) | |

Extra features

| Feature | Google | Microsoft | Watson |
|---|---|---|---|
| Adult | Very Unlikely | NO (0.1%) | N/A |
| Violence | Very Unlikely | N/A | N/A |
| Spoof | Very Unlikely | N/A | N/A |
| Racy | N/A | NO (0.1%) | N/A |
| Category | N/A | animal_dog | N/A |
| Dominant Color 1 | N/A | | |
| Dominant Color 2 | N/A | | |

No surprises here, all APIs properly detected everything in the image and classified it correctly.

Test 2: Sample from Microsoft demo

Tags

| Google | Microsoft | Watson |
|---|---|---|
| vacation (95%) | outdoor (99%) | beach (82%) |
| sports (78%) | sky (99%) | person (67%) |
| photo shoot (56%) | woman (96%) | jump (65%) |
| | person (94%) | |
| | beach (90%) | |

Extra features

| Feature | Google | Microsoft | Watson |
|---|---|---|---|
| Adult | Unlikely | NO (11%) | N/A |
| Violence | Very Unlikely | N/A | N/A |
| Spoof | Very Unlikely | N/A | N/A |
| Racy | N/A | YES (66%) | N/A |
| Category | N/A | people | N/A |
| Dominant Color 1 | N/A | | |

Interesting results in the tags for this image. You can clearly spot some key differences in the way the algorithms work behind the scenes: while Microsoft and Watson try to tag exactly what they see (woman/person, sky/beach), Google tries to get at the meaning of the image rather than what is literally in it, and returns vacation and sports.
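One rough way to put a number on those differences is to count how many tags each pair of services shares. The sketch below computes the Jaccard index over the Test 2 tags from the table above, ignoring confidence values. It is just one possible metric, and because it matches exact strings, near-synonyms such as sport/sports would not count as shared.

```python
# Tags from the Test 2 table, confidence values dropped.
TEST2_TAGS = {
    "Google": {"vacation", "sports", "photo shoot"},
    "Microsoft": {"outdoor", "sky", "woman", "person", "beach"},
    "Watson": {"beach", "person", "jump"},
}


def jaccard(a: set[str], b: set[str]) -> float:
    """Shared tags divided by all distinct tags (0.0 = disjoint, 1.0 = identical)."""
    union = a | b
    return len(a & b) / len(union) if union else 1.0


def pairwise_overlap(tags: dict[str, set[str]]) -> dict[tuple[str, str], float]:
    """Jaccard index for every pair of services, keyed by (name, name) in sorted order."""
    names = sorted(tags)
    return {
        (x, y): jaccard(tags[x], tags[y])
        for i, x in enumerate(names)
        for y in names[i + 1:]
    }


for pair, score in pairwise_overlap(TEST2_TAGS).items():
    print(pair, round(score, 2))
```

On this image Microsoft and Watson share person and beach (an index of about 0.33), while Google shares no tags with either of them.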

Also worth noting are the Racy flag on the Microsoft side and the Unlikely (rather than Very Unlikely) adult-content rating on the Google side.

Test 3: Sample from Watson demo

Tags

| Google | Microsoft | Watson |
|---|---|---|
| skiing (98%) | outdoor (99%) | ski (100%) |
| sports (98%) | skiing (99%) | sport (79%) |
| ski (92%) | snow (99%) | skiing (73%) |
| alpine skiing (92%) | slope (80%) | snow (71%) |
| piste (90%) | nature (76%) | |

Extra features

| Feature | Google | Microsoft | Watson |
|---|---|---|---|
| Adult | Very Unlikely | NO (0.3%) | N/A |
| Violence | Unlikely | N/A | N/A |
| Spoof | Very Unlikely | N/A | N/A |
| Racy | N/A | NO (10%) | N/A |
| Category | N/A | N/A | |
| Dominant Color 1 | N/A | | |

Once again, all classifiers get it right and detect most of what’s in the image.

Microsoft makes a mistake in the Category field, since this is obviously neither ocean nor beach.

Test 4: Random image of an office

Tags

| Google | Microsoft |
|---|---|
| office (89%) | indoor (99%) |
| multimedia (68%) | floor (99%) |
| furniture (65%) | wall (97%) |
| conference hall (60%) | office (97%) |
| headquarters (55%) | desk (94%) |

Interestingly, Watson did not recognize anything in this sample, leaving it all to Google and Microsoft, which both detected similar items in the image.

Generic scene conclusions

All platforms do well at tagging the images and provide confidence levels for those tags. I like how Microsoft tries to apply an indoor vs. outdoor tag to every image.
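Since every service attaches a confidence to each tag, a client will usually want to drop the weak ones before using them. Here is a minimal sketch that uses the Watson tags from Test 1. The 0.7 threshold is an arbitrary assumption and not a value any of the services recommends.

```python
# Watson tags from Test 1 as (tag, confidence) pairs.
WATSON_TEST1 = [("animal", 1.00), ("mammal", 1.00), ("dog", 0.98), ("retriever", 0.65)]


def confident_tags(tags: list[tuple[str, float]], threshold: float = 0.7) -> list[tuple[str, float]]:
    """Keep tags at or above the threshold (0.7 is an arbitrary default), highest first."""
    kept = [(name, score) for name, score in tags if score >= threshold]
    # sorted() is stable even with reverse=True, so equal scores keep their original order.
    return sorted(kept, key=lambda pair: pair[1], reverse=True)


print(confident_tags(WATSON_TEST1))
```

With the 0.7 cutoff, retriever (65%) is dropped, and animal, mammal and dog remain.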

In terms of extra features, both Google and Microsoft provide scores for adult/mature content. Even though these are named and scored differently, they tend to agree and to be spot on.
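Google reports a likelihood level while Microsoft reports a 0-1 score, so comparing the two takes a small normalization step. This is a minimal sketch. It assumes Google's levels are the SafeSearch likelihood names (VERY_UNLIKELY through VERY_LIKELY), and it uses arbitrary cutoffs (LIKELY for Google, 0.5 for Microsoft) that you would want to tune.

```python
# Google's SafeSearch likelihood levels, from least to most likely.
LIKELIHOODS = ["UNKNOWN", "VERY_UNLIKELY", "UNLIKELY", "POSSIBLE", "LIKELY", "VERY_LIKELY"]


def google_flags(likelihood: str, min_level: str = "LIKELY") -> bool:
    """Count a likelihood at or above min_level as a detection (assumed cutoff)."""
    return LIKELIHOODS.index(likelihood) >= LIKELIHOODS.index(min_level)


def microsoft_flags(score: float, cutoff: float = 0.5) -> bool:
    """Count a 0-1 score at or above cutoff as a detection (assumed cutoff)."""
    return score >= cutoff


def agree(likelihood: str, score: float) -> bool:
    """True when both services reach the same yes/no verdict."""
    return google_flags(likelihood) == microsoft_flags(score)


# Adult-content results from Tests 1-3: (Google likelihood, Microsoft score).
ADULT = {
    "Test 1": ("VERY_UNLIKELY", 0.001),
    "Test 2": ("UNLIKELY", 0.11),
    "Test 3": ("VERY_UNLIKELY", 0.003),
}

for test, (likelihood, score) in ADULT.items():
    print(test, agree(likelihood, score))
```

Under these cutoffs, all three adult-content results agree.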

I like Microsoft’s Category result, which tries to classify images into one or two categories to make it easier for a developer to make sense of the image. However, that field is based on a taxonomy of only 86 categories and is not as accurate as it should be; we’ve seen it go wrong in Test 3.
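Microsoft’s category names in the tables above (animal_dog, people) appear to join a parent category and a detail with an underscore. The sketch below splits a name on the first underscore and picks the highest-scoring category from a list. Two things here are assumptions for illustration: the list-of-dicts shape with name/score keys, and the 0.5 cutoff.

```python
from typing import Optional


def split_category(name: str) -> tuple[str, str]:
    """Split a category name such as 'animal_dog' into ('animal', 'dog')."""
    parent, _, detail = name.partition("_")
    return parent, detail


def top_category(categories: list[dict], min_score: float = 0.5) -> Optional[tuple[str, str]]:
    """Return the highest-scoring category, split, or None if none reaches min_score.

    Each entry is assumed to look like {"name": "animal_dog", "score": 0.9}.
    """
    best = max(categories, key=lambda c: c["score"], default=None)
    if best is None or best["score"] < min_score:
        return None
    return split_category(best["name"])


print(split_category("animal_dog"))
print(split_category("people"))
```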

While analyzing the JSON responses I found an interesting bonus in the Watson response: every tag comes with a type-hierarchy field that gives you the hierarchy the tag belongs to, e.g. for the tag dog the hierarchy is animals/pets/dog.
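The type hierarchy can be turned into a tree of tags without much work. This minimal sketch assumes the hierarchy arrives as a slash-separated string like the animals/pets/dog example, and it also accepts a leading slash. The animals/pets/cat path below is hypothetical.

```python
def hierarchy_path(type_hierarchy: str) -> list[str]:
    """Turn 'animals/pets/dog' (leading slash optional) into ['animals', 'pets', 'dog']."""
    return [part for part in type_hierarchy.split("/") if part]


def build_tree(hierarchies: list[str]) -> dict:
    """Merge several hierarchy strings into one nested dict of tags."""
    tree: dict = {}
    for hierarchy in hierarchies:
        node = tree
        for part in hierarchy_path(hierarchy):
            node = node.setdefault(part, {})
    return tree


# The first path is the example given above; the second one is hypothetical.
print(build_tree(["animals/pets/dog", "/animals/pets/cat"]))
```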

Text recognition

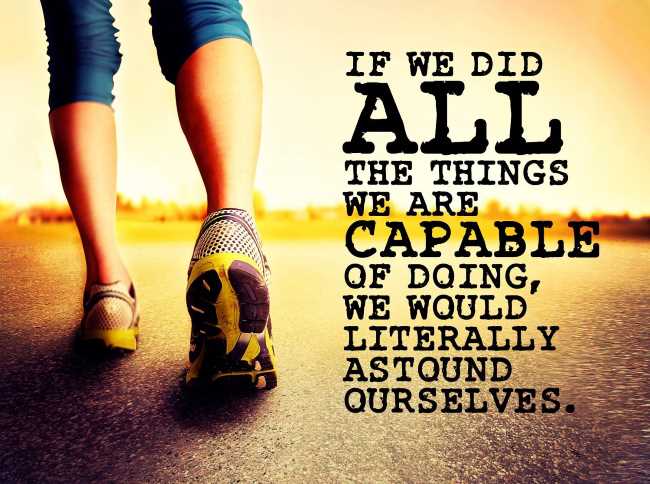

Test 5: Sample from Microsoft demo

Google

Detected language - en

IF WE DID

ALL

THE THINGS

WE ARE

CAPABLE

a F DaING

WE WaULD

LITERALLY

ASTOUND

OURSELVES.

Microsoft

Detected language - en

IF WE DID

ALL

THE THINGS

WE ARE

CAPABLE*

OF DOING,

WE WOULD

LITERALLY

ASTOUND

OURSELVE , “

Watson

[a] we did

gs

capable

[of] doing

[we] would

literal ly

as [found]

ourselves

Watson really struggles in this test: it gets a lot of the words wrong and completely mangles the phrase. Because it provides a confidence score for each word, at least you can tell when it has failed.

Google misses a couple of words but the phrase is still decipherable, while Microsoft gets almost everything right (only a few stray characters at the end), as expected since the image comes from their demo suite.
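Watson’s word-level confidence lets you flag words that are probably wrong instead of trusting them silently. This minimal sketch assumes each word comes as a dict with hypothetical word and score keys, and it uses an arbitrary 0.5 threshold.

```python
def low_confidence_words(words: list[dict], threshold: float = 0.5) -> list[str]:
    """Return the text of every word scored below the threshold.

    Each word is assumed to be {"word": <text>, "score": <0-1 confidence>};
    these key names are hypothetical.
    """
    return [w["word"] for w in words if w["score"] < threshold]


def annotate(words: list[dict], threshold: float = 0.5) -> str:
    """Rejoin the words, wrapping low-confidence ones in [brackets]."""
    return " ".join(
        w["word"] if w["score"] >= threshold else f"[{w['word']}]" for w in words
    )
```

A caller could use the flagged list to ask for a retry or a human check, and show the annotated string as a preview.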

Test 6: Sample from Watson demo

Google

CAUTION

Garbage Trucks

Entering and

Leaving

Highway

Microsoft

CAUTION

Garbage Trucks

Entering and

Leaving

Highway

Watson

caution

garbage trucks

entering and

leaving

highway

All of them get this right, with Watson messing up the capitalization, which I think is more of an API issue than a detection problem.
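If the capitalization really is an API quirk, a case-insensitive comparison should show that Watson read the sign correctly. The check below uses the Microsoft and Watson lines from Test 6. str.casefold is Python’s built-in for caseless matching.

```python
# Test 6 output, one entry per detected line.
MICROSOFT = ["CAUTION", "Garbage Trucks", "Entering and", "Leaving", "Highway"]
WATSON = ["caution", "garbage trucks", "entering and", "leaving", "highway"]


def same_text_ignoring_case(a: list[str], b: list[str]) -> bool:
    """True when both outputs have the same lines once case is folded."""
    return len(a) == len(b) and all(x.casefold() == y.casefold() for x, y in zip(a, b))


print(MICROSOFT == WATSON)
print(same_text_ignoring_case(MICROSOFT, WATSON))
```

A strict comparison fails but the caseless one succeeds, so only the letter case differs between the two results.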

Text test conclusions

All of them recognize words and their positions on lines, and return a bounding box for each word.

Watson messed up the Microsoft sample, and even on its own sample it was the only one that did not capitalize the words correctly.

Watson is the only one with a confidence score attached to each word.

Microsoft does not return the entire text in a single field, but it has additional properties such as orientation and text angle.
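Getting the full text out of Microsoft’s response takes a little work on the client side. This minimal sketch assumes the OCR response nests words inside lines inside regions, and that each word carries a text field. Bounding boxes are left out here. Check the current Computer Vision OCR documentation for the exact layout before relying on it.

```python
def full_text(ocr_response: dict) -> str:
    """Rebuild the text of a regions -> lines -> words OCR response, one line per row."""
    lines = []
    for region in ocr_response.get("regions", []):
        for line in region.get("lines", []):
            lines.append(" ".join(word["text"] for word in line.get("words", [])))
    return "\n".join(lines)


# Trimmed, hand-written sample in the assumed layout (bounding boxes left out).
sample = {
    "language": "en",
    "orientation": "Up",
    "regions": [
        {
            "lines": [
                {"words": [{"text": "IF"}, {"text": "WE"}, {"text": "DID"}]},
                {"words": [{"text": "ALL"}]},
            ]
        }
    ],
}

print(full_text(sample))
```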

Both Microsoft and Google detect the language (only English was tested).

Faces

Since Google does not provide age/gender detection, it is not included in the face tests below.

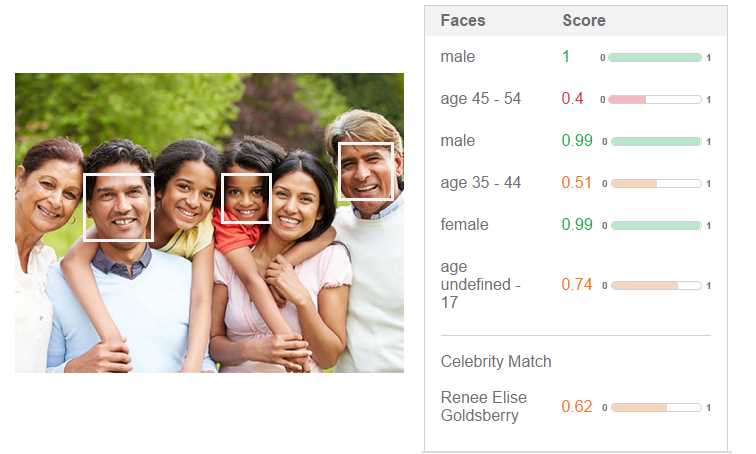

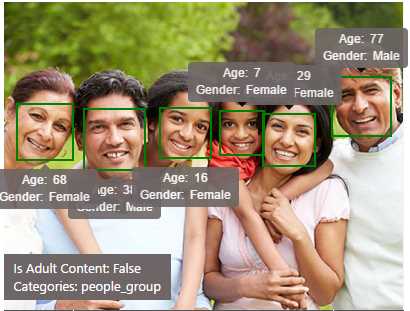

Test 8: Sample from Watson demo

Both correctly recognize the gender and approximate age (I don’t know the exact ages, but they look right).

Test 9: Sample from Microsoft demo

Microsoft takes the lead in this one (as expected, since it’s their own sample), detecting all faces in the picture with the correct genders. Again, age accuracy is hard to judge.

Interestingly, Watson attempts a celebrity match and gets it wrong, despite a fairly high confidence (62%).

Test 10: Control image

In the final image of the suite, both get the gender right, but Watson’s age estimate is closer than Microsoft’s (actual age: 44). Watson also correctly identifies the person’s name, while Microsoft does not.

Face detection conclusions

All three providers return bounding boxes for faces. Google also returns the positions of all facial landmarks and includes emotion detection in the API, but provides no age or gender.
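Each provider returns its own face boxes, so lining up two services’ results for the same face comes down to matching boxes that overlap. The minimal sketch below does this with intersection over union (IoU) and greedy matching. It assumes every box has already been converted to (left, top, width, height) in pixels, and the 0.5 IoU cutoff is an arbitrary choice.

```python
Box = tuple[float, float, float, float]  # (left, top, width, height) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes: 0.0 = no overlap, 1.0 = identical."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    overlap_w = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    overlap_h = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = overlap_w * overlap_h
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def match_faces(boxes_a: list[Box], boxes_b: list[Box], min_iou: float = 0.5) -> list[tuple[int, int]]:
    """Greedily pair each box in boxes_a with its best still-unused box in boxes_b."""
    pairs: list[tuple[int, int]] = []
    used: set[int] = set()
    for i, a in enumerate(boxes_a):
        candidates = [(iou(a, b), j) for j, b in enumerate(boxes_b) if j not in used]
        if candidates:
            score, j = max(candidates)
            if score >= min_iou:
                pairs.append((i, j))
                used.add(j)
    return pairs
```

Greedy matching is simple and good enough for a handful of faces. For crowded images, an optimal assignment method such as the Hungarian algorithm would be the more robust choice.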

As with everything else, Watson gives a confidence score for each detection, which is really nice, and it is the only API that does so. It does not try to guess an exact age, but returns an interval instead.

None of them is particularly good at detecting age in the control sample.
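Scoring an age interval against a known age works a little differently from scoring a single number. This minimal sketch assumes the interval arrives as a dict with hypothetical min and max keys, and either key may be missing.

```python
import math


def age_in_range(age: dict, actual: int) -> bool:
    """True if actual lies inside an interval like {"min": 35, "max": 44}; missing bounds are open."""
    low = age.get("min", -math.inf)
    high = age.get("max", math.inf)
    return low <= actual <= high


def interval_error(age: dict, actual: int) -> float:
    """Years from actual to the nearest edge of the interval (0 when actual is inside)."""
    low = age.get("min", -math.inf)
    high = age.get("max", math.inf)
    return max(low - actual, 0, actual - high)
```

For a single-number estimate, the equivalent error is just the absolute difference from the actual age, which puts both styles of answer on the same scale in years.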